Zero-shot, few-shot, and chain-of-thought prompting techniques are methods used to guide large language models to perform tasks with minimal or no task-specific training.

Zero-shot prompting relies on well-crafted instructions to solve a task without any examples. Few-shot prompting provides a small number of examples within the prompt to help the model infer the task pattern.

Chain-of-thought prompting encourages the model to generate intermediate reasoning steps before producing the final answer, improving performance on complex reasoning and problem-solving tasks.

Core Prompting Paradigms

Prompting paradigms define how we instruct LLMs to reason and generate outputs.

These methods leverage the model's pre-trained knowledge, scaling performance with minimal data. Mastering them empowers you to build robust generative AI applications, such as sentiment analysis tools or code generators for Django backends.

Zero-Shot Prompting: Instruction Without Examples

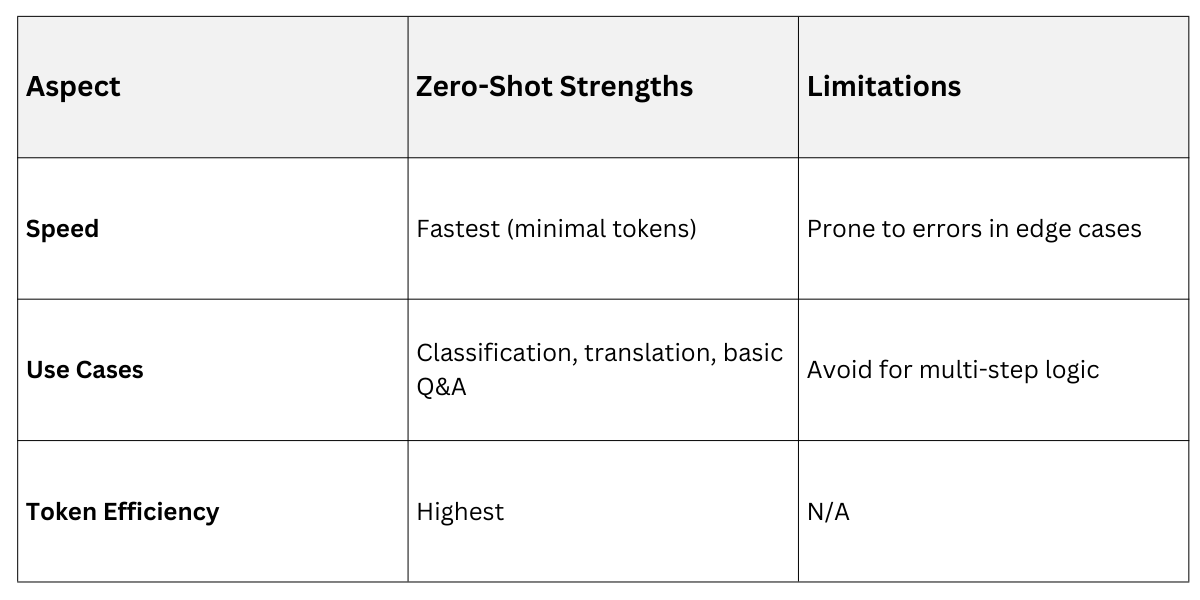

Zero-shot prompting instructs the model directly on a task without providing any examples, relying solely on its vast pre-training to infer the desired output. It's the simplest and fastest technique, ideal for straightforward tasks where the model already generalizes well.

This approach shines in production environments, such as classifying user queries in a FastAPI web app, because it minimizes latency and token usage.

Consider a data science scenario: You're building a customer support chatbot for an e-commerce site. A zero-shot prompt might look like this:

Classify the sentiment of this review as positive, negative, or neutral: "The delivery was quick, but the product arrived damaged."

The model responds: Negative.
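The prompt above can be assembled programmatically. The following is a minimal sketch; the helper name `build_zero_shot_prompt` and its parameters are hypothetical and only illustrate that a zero-shot prompt contains an instruction and the input, with no examples.

```python
def build_zero_shot_prompt(review: str, labels: list[str]) -> str:
    """Build an instruction-only prompt: the task and the input, no examples."""
    # Join labels as "a, b, or c" to match the wording of the prompt above.
    label_text = ", ".join(labels[:-1]) + f", or {labels[-1]}"
    return f'Classify the sentiment of this review as {label_text}: "{review}"'


prompt = build_zero_shot_prompt(
    "The delivery was quick, but the product arrived damaged.",
    ["positive", "negative", "neutral"],
)
print(prompt)
```

Keeping the label list as a parameter makes it easy to reuse the same template for other classification tasks.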

Key Advantages

1. Extremely low computational cost: no examples means shorter prompts.

2. Broad applicability to unseen tasks, such as summarizing news articles or extracting entities from text.

3. Best for high-volume inference, like real-time web API responses.

However, it falters on complex reasoning or domain-specific nuances. For instance, in a machine learning pipeline, a zero-shot prompt might misclassify ambiguous healthcare data because it has no context to draw on.

In practice, combine zero-shot prompting with a low temperature setting (e.g., 0.1 for near-deterministic outputs) in libraries like Hugging Face Transformers for reliable web deployments.
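To see why a low temperature makes outputs more predictable, here is a toy sketch of temperature-scaled softmax. It is not the Hugging Face implementation; the function name and the three logit values are assumptions chosen for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]
sharp = softmax_with_temperature(logits, 0.1)  # almost all mass on the top token
flat = softmax_with_temperature(logits, 1.0)   # mass spread across tokens
print(sharp, flat)
```

At temperature 0.1 the top-scoring token receives nearly all of the probability, so sampling almost always picks it; at 1.0 the alternatives remain likely.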

Few-Shot Prompting: Learning from Examples

Few-shot prompting provides a handful of input-output examples in the prompt to "teach" the model the pattern, enabling adaptation to new tasks without fine-tuning.

Typically using 1-10 examples, it bridges the gap between zero-shot simplicity and full training data requirements.

This technique excels when zero-shot underperforms but you lack resources for fine-tuning, common in iterative course content generation or prototyping ML models.

Here's a practical example for generating Python code snippets in a data science tutorial:

Example 1:

Input: Write a function to calculate mean of [1,2,3]

Output: def calculate_mean(numbers): return sum(numbers)/len(numbers)

Example 2:

Input: Write a function to find max of [4,5,1]

Output: def find_max(numbers): return max(numbers)

Input: Write a function to sort [3,1,2]

Output:

Model output: def sort_list(numbers): return sorted(numbers)

1. Select diverse examples: Choose 2-5 that cover variations, like edge cases in your web dev projects (e.g., empty lists).

2. Format consistently: Use clear delimiters like "Input:" and "Output:" for parseability.

3. Order matters: Place the most relevant example first to prime the model.

4. Scale judiciously: More examples improve accuracy but increase costs, so test the trade-off with tools like LangChain.
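The formatting guidance above can be sketched as a small prompt builder. The function name `build_few_shot_prompt` is hypothetical; the example pairs are the ones from the code-generation prompt earlier in this section.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Format (input, output) pairs with consistent delimiters, then append the new input."""
    blocks = [
        f"Example {i}:\nInput: {inp}\nOutput: {out}"
        for i, (inp, out) in enumerate(examples, start=1)
    ]
    # End with an open "Output:" so the model completes the pattern.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


examples = [
    ("Write a function to calculate mean of [1,2,3]",
     "def calculate_mean(numbers): return sum(numbers)/len(numbers)"),
    ("Write a function to find max of [4,5,1]",
     "def find_max(numbers): return max(numbers)"),
]
prompt = build_few_shot_prompt(examples, "Write a function to sort [3,1,2]")
print(prompt)
```

Because the examples are passed as a list, reordering them or swapping in edge cases (such as an empty list) is a one-line change when testing which set works best.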

Real-World Wins

1. Style transfer for frontend CSS generation.

2. Few-shot classification feeding scikit-learn pipelines, reported here to boost accuracy by 10-20% over zero-shot, though the gain depends on the task and model.

Advanced Reasoning Techniques

Building on basic paradigms, advanced methods enhance logical inference. Here, we dive into chain-of-thought (CoT) and its variants, crucial for architecting AI systems handling complex queries.

These build reasoning scaffolds, mimicking human step-by-step thinking to unlock emergent capabilities in larger models.

Chain-of-Thought Prompting: Step-by-Step Reasoning

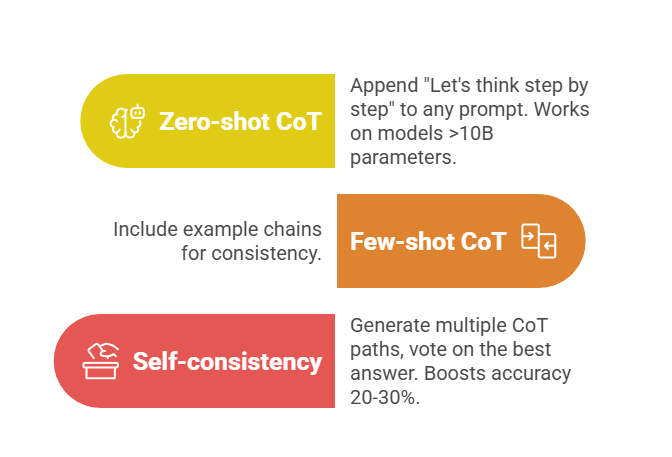

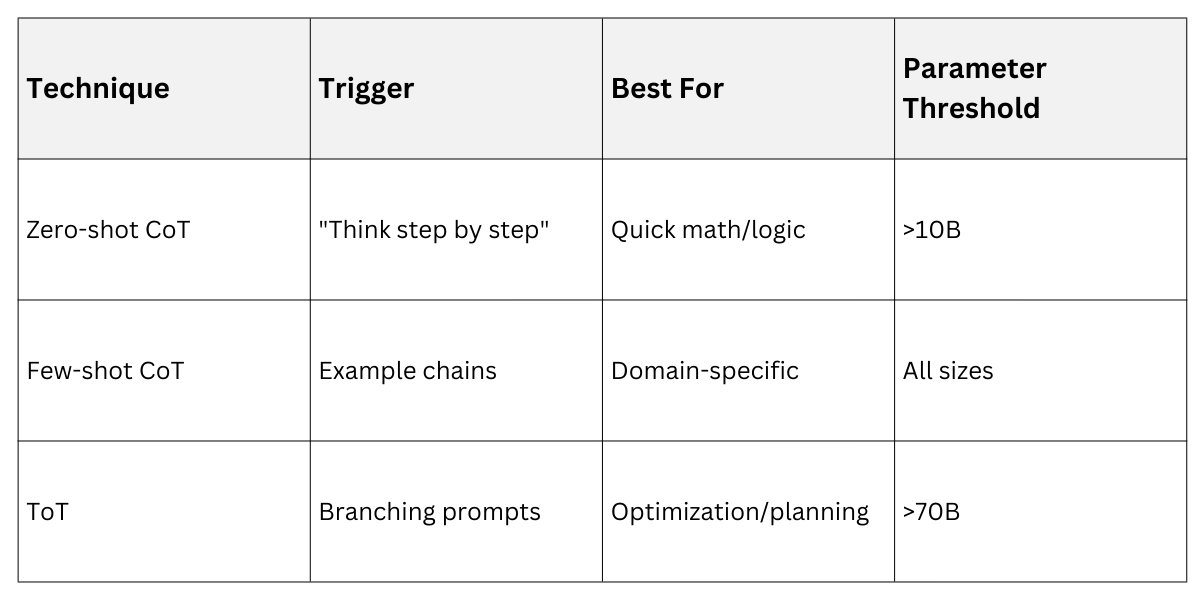

Chain-of-thought (CoT) prompting encourages the model to break a problem into intermediate reasoning steps before giving a final answer, which improves performance on arithmetic, commonsense, and symbolic tasks. Introduced in 2022 research, it elicits reasoning chains either implicitly, with a cue such as "Let's think step by step", or explicitly, with worked examples in the prompt.

CoT is transformative for data science workflows, like debugging PyTorch models or optimizing query generation in SQL-for-AI tools.

Prompt example for a web analytics task:

Q: A site has 1000 visitors on day 1, growing 20% daily. How many on day 5?

Let's think step by step:

Model:

Day 1: 1000

Day 2: 1000 * 1.2 = 1200

Day 3: 1200 * 1.2 = 1440

Day 4: 1440 * 1.2 = 1728

Day 5: 1728 * 1.2 = 2073.6 ≈ 2074 visitors.

Variants

1. Explicit CoT: Spell out steps in prompt.

2. Tree-of-Thoughts (ToT): Branch explorations for planning (advanced extension).

3. Evaluate chains: Use another LLM to score reasoning quality.
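Since a reasoning chain can contain arithmetic slips, it helps to verify the numbers independently. The sketch below recomputes the day-by-day figures from the web analytics example; the function name `visitors_by_day` is hypothetical.

```python
def visitors_by_day(start: float, daily_growth: float, days: int) -> list[float]:
    """Return visitor counts for day 1..days under compound daily growth."""
    counts = [start]
    for _ in range(days - 1):
        counts.append(counts[-1] * (1 + daily_growth))
    return counts


counts = visitors_by_day(1000, 0.20, 5)
for day, count in enumerate(counts, start=1):
    print(f"Day {day}: {count:.1f}")

# Closed form for comparison: start * (1 + growth) ** (days - 1)
closed_form = 1000 * 1.2 ** 4
```

Day 5 is four growth steps after day 1, which is why the closed form uses an exponent of 4 rather than 5.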

In your FastAPI apps, integrate CoT via the OpenAI API for dynamic A/B testing recommendations:

prompt = """
Analyze these metrics: CTR=0.05, Conversion=0.02. Suggest improvements.
Think step by step.
"""

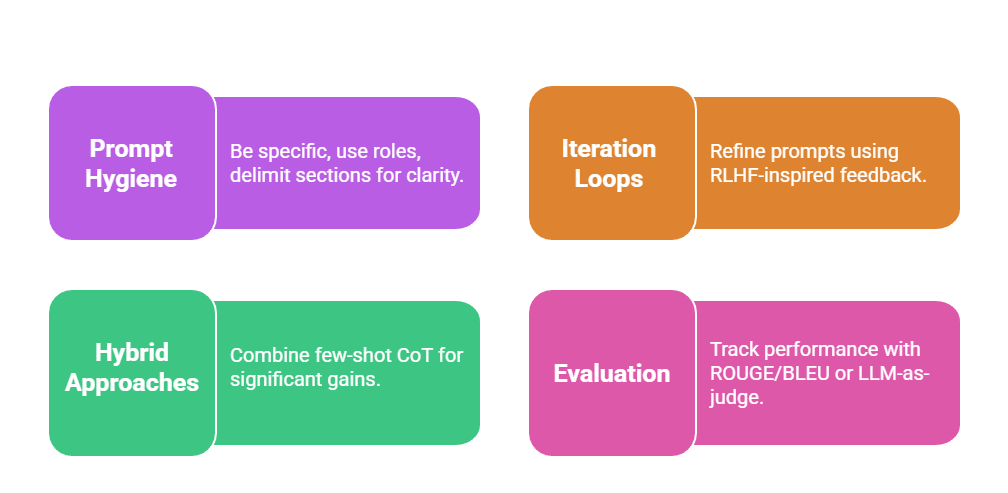

Guidelines from Google's PaLM 2 Playbook

1. Start with zero-shot; escalate to few-shot/CoT if accuracy <80%.

2. For web-scale: Cache few-shot prompts in vector DBs like Pinecone.
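The escalation rule in the first guideline can be sketched as a simple loop. This is an illustration under stated assumptions: `choose_technique` and the `evaluate` callback are hypothetical names, and the accuracy figures in the usage example are made-up placeholders rather than measured results.

```python
from typing import Callable

# Ordered from cheapest to most expensive, as in the guideline above.
TECHNIQUES = ["zero-shot", "few-shot", "cot"]


def choose_technique(evaluate: Callable[[str], float], threshold: float = 0.80) -> str:
    """Return the first technique meeting the accuracy threshold, else the best seen."""
    best_name, best_score = TECHNIQUES[0], float("-inf")
    for name in TECHNIQUES:
        score = evaluate(name)  # accuracy on your own validation set
        if score >= threshold:
            return name
        if score > best_score:
            best_name, best_score = name, score
    return best_name


# Placeholder accuracies standing in for a real evaluation run.
fake_scores = {"zero-shot": 0.72, "few-shot": 0.85, "cot": 0.90}
chosen = choose_technique(lambda name: fake_scores[name])
print(chosen)
```

Stopping at the first technique that clears the bar keeps prompts as short as possible, which matters for cost and latency at scale.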

Practical Tip: In Jupyter notebooks, A/B test prompts using Weights & Biases for data science courses.

Best Practices and Implementation Tips

To maximize these techniques, follow evidence-based strategies tailored for production.

Code snippet for FastAPI integration:

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

def prompt_model(task: str, technique: str = "zero-shot") -> str:
    # Default to the bare task so `prompt` is always defined (zero-shot).
    prompt = task
    if technique == "cot":
        prompt = f"{task}\nLet's think step by step."
    # Add few-shot examples here
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
