As large language models tackle increasingly complex reasoning tasks, advanced prompting strategies have emerged to improve accuracy, interpretability, and robustness.

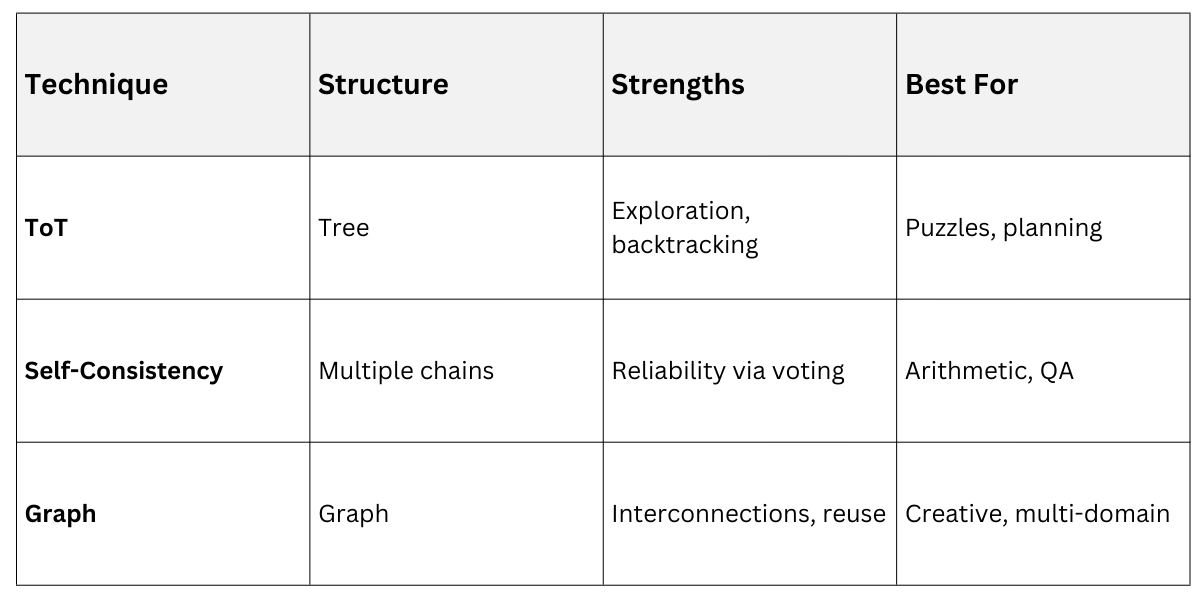

Tree-of-Thoughts, Graph Prompting, and Self-Consistency methods extend beyond linear chain-of-thought reasoning by exploring multiple reasoning paths, structured relationships, and consensus-based inference.

These approaches are especially useful for problem-solving, planning, and multi-step decision-making tasks.

Tree-of-Thoughts Prompting

Tree-of-thoughts (ToT) prompting extends chain-of-thought by organizing reasoning into a tree structure, where models explore multiple paths before converging on solutions.

This approach shines in tasks needing lookahead and backtracking, like puzzles or strategic planning.

Introduced in 2023 research, ToT treats coherent text units as "thoughts" at tree nodes, allowing large language models to self-evaluate and prune ineffective branches.

Unlike linear chains, it enables deliberate decision-making and can significantly improve performance: on the Game of 24 puzzle, GPT-4 solved 4% of tasks with chain-of-thought prompting and 74% with ToT.

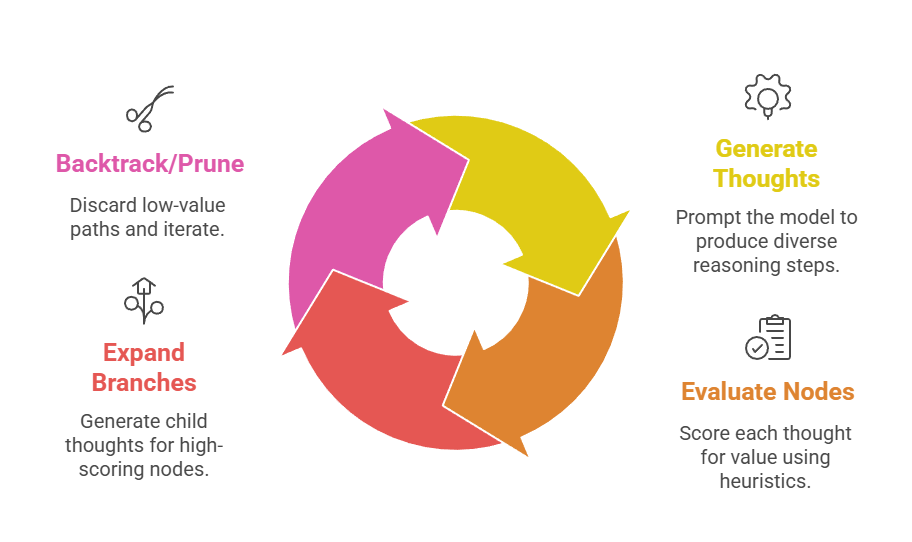

Core Process

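Follow these steps to implement ToT:

1. Decompose the problem into intermediate thought steps.

2. Generate several candidate thoughts at each step.

3. Have the model evaluate each candidate, for example by scoring or voting.

4. Search the tree breadth-first or depth-first, pruning weak branches and backtracking when needed.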

A practical example is solving a creative writing task: Start with "Brainstorm three plot twists for a sci-fi story," evaluate for originality, then expand the best into full scenes.

Example Prompt Snippet

Problem: Solve Game of 24 using numbers 4, 4, 4, 4.

Thought 1: 4*4=16, then 16+4=20, 20+4=24. Value: High.

Expand: Confirm operations...

This structure fosters exploration without exhaustive search.
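The generate, evaluate, and prune loop can be sketched on the Game of 24 example as a small beam search. This is a minimal illustration, not the published implementation: in ToT the proposer and evaluator are LLM calls, whereas here `propose` and `evaluate` are deterministic stand-ins, and the names `tree_of_thoughts_bfs` and `beam_width` are assumptions made for this example.

```python
from fractions import Fraction
from itertools import combinations

TARGET = Fraction(24)

def propose(numbers, steps):
    """Stand-in for LLM thought generation: combine any two numbers once."""
    children = []
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = [n for k, n in enumerate(numbers) if k not in (i, j)]
        options = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            options.append((a / b, f"{a} / {b}"))
        if a != 0:
            options.append((b / a, f"{b} / {a}"))
        for value, expr in options:
            child = tuple(sorted(rest + [value]))
            children.append((child, steps + [f"{expr} = {value}"]))
    return children

def evaluate(numbers):
    """Stand-in for LLM self-evaluation: a crude heuristic that prefers
    states whose remaining numbers sum close to the target."""
    return -abs(TARGET - sum(numbers))

def tree_of_thoughts_bfs(start, beam_width=5):
    """Breadth-first search that keeps only the best states at each depth."""
    frontier = [(tuple(sorted(Fraction(n) for n in start)), [])]
    while frontier and len(frontier[0][0]) > 1:
        children = {}
        for numbers, steps in frontier:
            for child, child_steps in propose(numbers, steps):
                children.setdefault(child, child_steps)  # drop duplicate states
        if (TARGET,) in children:
            return children[(TARGET,)]
        ranked = sorted(children.items(),
                        key=lambda item: evaluate(item[0]), reverse=True)
        frontier = ranked[:beam_width]  # prune weak branches
    return None

solution = tree_of_thoughts_bfs([4, 4, 4, 4])
print(solution)
```

Because the beam discards low-scoring states, a poor evaluator can prune the only path to a solution. Evaluation quality therefore matters as much as generation quality.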

Self-Consistency Methods

Self-consistency enhances chain-of-thought by generating multiple reasoning paths and selecting the most frequent answer, leveraging model stochasticity for reliability. It addresses greedy decoding flaws, where a single path leads to errors.

Developed in 2022 and published at ICLR 2023, this method samples diverse reasoning chains from the same prompt, then marginalizes over them to select the most consistent final answer. On arithmetic benchmarks such as GSM8K, it improved accuracy by 17.9 percentage points.

Implementation Steps

1. Craft a chain-of-thought prompt with few-shot examples.

2. Sample 10 to 40 reasoning paths with a sampling temperature above 0.

3. Extract final answers and take majority vote.

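Consider the Age Riddle: "When I was 6, my sister was half my age. Now I'm 70, how old is she?" The sister was 3 at the time, so she is 3 years younger and is now 67. A chain that simply halves 70 lands on 35. Across multiple sampled chains, 67 appears more often than 35, so the majority vote filters out the inconsistent answer.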
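The sample, extract, and vote steps can be sketched as follows. This is a minimal illustration under stated assumptions: `sample_paths` is a hypothetical stand-in that cycles through canned completions instead of calling a model at temperature above 0, and the "The answer is N" pattern is an assumed output convention rather than part of the method.

```python
import re
from collections import Counter
from itertools import cycle, islice

# Canned completions standing in for sampled model outputs (assumption).
CANNED_PATHS = [
    "She was 3 when I was 6, so she is 3 years younger. 70 - 3 = 67. The answer is 67.",
    "Half of 6 is 3, a gap of 3 years. 70 - 3 = 67. The answer is 67.",
    "She is half my age, so 70 / 2 = 35. The answer is 35.",
]

def sample_paths(prompt, num_samples):
    """Hypothetical stand-in for sampling an LLM with temperature > 0."""
    return list(islice(cycle(CANNED_PATHS), num_samples))

def extract_answer(path):
    """Pull the final answer out of one reasoning path, if present."""
    match = re.search(r"The answer is (-?\d+)", path)
    return match.group(1) if match else None

def self_consistency(prompt, num_samples=10):
    """Return the most frequent final answer and the full vote tally."""
    answers = [extract_answer(p) for p in sample_paths(prompt, num_samples)]
    votes = Counter(a for a in answers if a is not None)
    winner, _ = votes.most_common(1)[0]
    return winner, votes

winner, votes = self_consistency(
    "When I was 6, my sister was half my age. Now I'm 70, how old is she?"
)
print(winner, dict(votes))
```

Only the final answers are compared, not the reasoning text, so two chains that reach 67 by different routes still count as agreeing.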

Graph Prompting

Graph prompting, or Graph-of-Thoughts (GoT), models reasoning as a graph with nodes as thoughts and edges as relationships like aggregation or refinement, capturing human thought's non-linearity. It surpasses trees by allowing cycles and merges for complex interconnections.

Emerging from 2023-2025 research, GoT supports operations like combining thoughts (e.g., averaging values) or looping refinements, ideal for tasks like multi-faceted analysis or recommendation systems.

Key Features

1. Non-linear paths: A thought can connect to any other thought, not only from parent to child as in ToT branches.

2. Dynamic ops: Merge (combine nodes), refine (improve via loops), or aggregate (e.g., vote).

3. Efficiency: Reusing existing nodes avoids regenerating thoughts and cuts API calls.

In molecular design, nodes represent property predictions; edges merge for optimized compounds. Example: For logistics, node A (route 1 cost), B (route 2), edge merges into optimal hybrid.
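The logistics example can be sketched as a tiny thought graph in which a merge node has two parents, something a tree cannot express. This is an illustrative sketch only: the `Thought` class, the `aggregate` helper, the leg names, and the costs are all hypothetical, and a real system would produce each thought with a model call.

```python
from dataclasses import dataclass, field

@dataclass
class Thought:
    name: str
    legs: dict                      # leg name -> cost (made-up figures)
    parents: list = field(default_factory=list)

    @property
    def cost(self):
        return sum(self.legs.values())

def aggregate(name, *thoughts):
    """Merge several thoughts into one node, keeping the cheapest option per leg."""
    merged = {}
    for thought in thoughts:
        for leg, cost in thought.legs.items():
            merged[leg] = min(cost, merged.get(leg, cost))
    # The new node records every input as a parent, forming a graph edge each.
    return Thought(name, merged, parents=list(thoughts))

route_a = Thought("A", {"pickup": 40, "highway": 120, "delivery": 30})
route_b = Thought("B", {"pickup": 55, "highway": 90, "delivery": 35})
hybrid = aggregate("hybrid", route_a, route_b)

print(route_a.cost, route_b.cost, hybrid.cost)
print([p.name for p in hybrid.parents])
```

Taking the minimum per leg is just one possible merge rule. Averaging or voting, as mentioned above, would slot into `aggregate` the same way.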

Practical Applications and Best Practices

These methods integrate into generative AI pipelines for superior outcomes. Combine them—for example, use ToT to generate graph nodes, then self-consistency for finals.

1. Game solving: ToT excels at Game of 24 puzzles.

2. Commonsense QA: Self-consistency lifts ARC-challenge by 3.9%.

3. Creative tasks: Graph prompting aids story generation with merged ideas.

Best Practices

In Python FastAPI apps, chain the API calls that drive tree or graph traversal, and keep intermediate states in memory so that repeated states are not recomputed.
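One simple way to keep states in memory is to memoize the evaluation step so that a state reached along two different paths is scored only once. The sketch below uses the standard library's `functools.lru_cache`. `evaluate_state` is a hypothetical stand-in for an expensive model call, and the FastAPI wiring is omitted.

```python
from functools import lru_cache

call_count = 0  # counts how often the "expensive" evaluation actually runs

@lru_cache(maxsize=1024)
def evaluate_state(state):
    """Hypothetical stand-in for a model call that scores a reasoning state.

    States must be hashable (tuples here) to be usable as cache keys.
    """
    global call_count
    call_count += 1
    return -abs(24 - sum(state))

# The first and third states are identical, so the third is a cache hit.
states = [(4, 4, 16), (4, 4, 8), (4, 4, 16)]
scores = [evaluate_state(s) for s in states]

print(scores, call_count, evaluate_state.cache_info().hits)
```

This cache lives inside a single Python process. A deployment with several worker processes would need a shared store if states must be reused across workers.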